Are You Using The Right Cache?

- 3558 words

- 18 min

Caching can be used to boost performance and improve the resiliency of a microservice system.

Caching, though, comes in many flavours: local, distributed, hierarchical - which one should you use?

"It depends!" screams a senior developer in the back of the room.

This article provides an in-depth overview of the pros and cons of several caching strategies to help you make an informed decision within the constraints of your own system.

Table of Contents

Why Do We Need A Cache?

Before discussing the solution, let's look again at the problem: what issues does caching solve in a microservice architecture?

Here we use a bit of probability theory to build the case for caching in a deep microservice architecture.

If you have heard all this stuff before, feel free to skip this section.

A Primer On Microservices

A microservice architecture can help an organization to move faster.

Complex business flows are decomposed into smaller domains, owned by different teams.

They interact with each other via APIs and, as long as the API contracts remain stable, there is no need to coordinate - teams can iterate faster within their ownership boundaries.

Complexity does not disappear though, it just moves elsewhere.

Every time we break down a domain we add one or more new microservices.

What was previously accomplished within a single application now requires an additional request over the network.

Each of those requests introduces new failure modes, impacts the overall availability and performance profile of the system.

The Cost Of A Remote Procedural Call

Let's consider a simple microservice system as a toy model:

We have an API exposed to the Internet, Edge API.

Every time Edge API is called by an end-user to perform an operation, Edge API perform two additional requests over the network: one to A, one to B.

A is not self-sufficient either: it needs to call C and D to fulfill the task it received by Edge API.

Let's look, now, at how those remote procedural calls (RPC) impact the availability and the performance characteristics of the system.

Availability

Definition

We define availability as the ratio of well-formed requests handled successfully by a server1:

$$ \text{Availability} = \frac{\text{# successful requests}}{\text{# incoming well-formed requests}} $$

E.g. if 99 requests are successful out of 100, our server has an availability of 0.99.

You will often find availability expressed as a percentage - in the very same case, we'd say that the server has 99% availability.

The Impact Of RPCs On Availability

Even if there are no RPCs we still cannot expect 100% availability from an API: the underlying machine might abruptly shut down, it might run out of RAM, there might be a power loss or a fire in your data center, etc.

$p_f$ is the probability of failure for each component in our toy model when handling a request in isolation ($0 < p_f < 1$).

$p_s$, instead, is their probability of success in isolation.

We have:

$$p_s = 1 - p_f$$

If a service has no dependencies (e.g. C) then $p_s$ is exactly its availability.

For example, if $p_s=0.9999$, we have 4 nines of availability - 99.99% of incoming requests are handled successfully.

Let's look at a service with some dependencies - A, for example.

A handles an incoming request successfully if and only if2:

- the call to

Csucceeds; - the call to

Dsucceeds; Adoes not experience an intrinsic failure.

If you run the math using the law of total probability and assuming that the outcomes of all those events are independent from each other3, you'll find that A's availability is equal to $p_s^3$.

What about Edge API?

You can prove that the availability of a service in our toy model is $p_s^{n+1}$ where $n$ is the number of its direct or indirect dependencies.

For Edge API, $p_s^5$.

Is that good? Is that bad?

Let's do the math for a few different values of $p_s$ and $n$.

| n=0 | n=1 | n=2 | n=5 | n=10 | |

|---|---|---|---|---|---|

| $p_s=0.99999$ | 99.999% | 99.998% | 99.997% | 99.994% | 99.989% |

| $p_s=0.9999$ | 99.99% | 99.98% | 99.97% | 99.94% | 99.89% |

| $p_s=0.9995$ | 99.95% | 99.90% | 99.85% | 99.70% | 99.45% |

| $p_s=0.9990$ | 99.90% | 99.80% | 99.70% | 99.40% | 98.91% |

| $p_s=0.9950$ | 99.50% | 99.00% | 98.51% | 97.04% | 94.64% |

If we assume a constant request rate we can convert those availabilities into a downtime budget: how long can your service be down?

If you are running a service with 99.99% availability you cannot afford more than 52 minutes of downtime per year.

If your Edge API relies on 10 dependencies, then each component in the system needs to have 99.999% availability to offer 99.99% availability to your customers - your internal APIs can only afford 5 minutes of downtime over a whole year!

They need to be an order of magnitude more reliable.

That has massive impact on your service level.

Four nines of availability are already extremely challenging - that's AWS offers for its compute offering, AWS ECS.

Five nines? Unlikely you will pull it off without a massive investment of money, energy and effort.

There is another phenomenon worth pointing out: it only takes one badly-behaved dependency to massively impact the availability of your edge services.

What happens if one of those 10 dependencies offers 99.5% availability instead of 99.99%? Your availability at the edge becomes 99.4%!

In other words, you are only as strong as the weakest link the chain.

Caching Breaks The Dependency Chain

What if we introduce a cache? Does that help?

Let's focus on Edge API and A.

We introduce a client-side cache in Edge API to store the responses it received by A.

In pseudo-code,

if cache_hit(request):

return get_from_cache(request)

else:

response = call_a(request)

set_cache_in_background(response)

return response

How does this impact Edge API's availability?

Assuming A is the only dependency, a call to Edge API succeeds if:

- the response is cached or the call to

Asucceeds; Edge APIdoes not experience an intrinsic failure.

Let's call $p_{hit}$ the probability of a cache hit.

Then Edge API's availability is4

$$p_s[p_{hit}+(1-p_{hit})p_s]$$

Let's assume $p_s=0.9999$.

If we didn't have a cache, Edge API's availability would be 99.980%.

With a 50% chance to find a response in the cache, Edge API's availability becomes 99.985%.

With a 90% chance to find a response in the cache, Edge API's availability becomes 99.989%.

In other words, if our cache hit ratio is high, Edge API's availability is barely affected by having A as a dependency (99.99% vs 99.989%).

Let's go back to our original model.

If we cache A's responses, we are not just skipping a call to A. We are also avoiding a call to C and D.

This reduces the number of effective dependencies of Edge API from 5 to 1.

Our availability jumps to 99.98% instead of 99.94%, a massive improvement!

Latency

Latency Percentiles

Let's look at another important property of our system - latency.

How long does it take to respond to a request?

Each request will take a different time to complete - we are interested in a few aggregated indicators to determine how the system is doing as whole.

We will usually try to measure the median response time and the distribution of long-tail latencies.

If the median response time of our system is 67 milliseconds then 50% of incoming requests are fulfilled in less than 67 milliseconds.

When talking about long-tail latencies we will look at latency percentiles - p90, p95, p99.

Saying that the p99 of our system is 162 milliseconds implies than 99% of incoming requests are fulfilled in less than 162 milliseconds.5

The Impact Of RPCs On Latency

Let's assume that requests to dependencies are not fired in parallel (i.e. Edge API waits for A to respond before calling B).

How does the number of dependencies of our Edge API impact the latency of the system?

The math is a bit trickier.

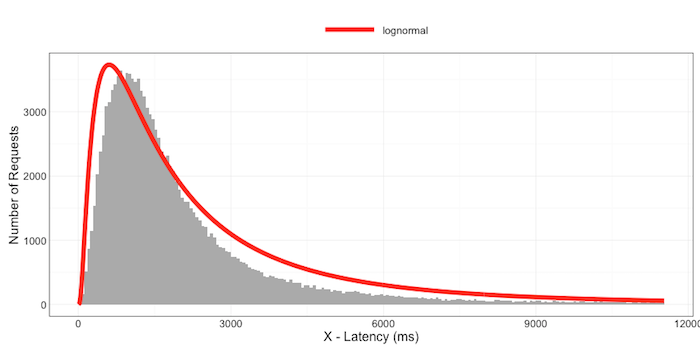

We can approximate the latency distribution of our components with a log-normal or a gamma distribution.

The median latency of the whole system can be approximated as the sum of the median latency of its components.

If each component in our toy model has a median latency of 50ms (including the network roundtrip), Edge API will have a median latency of 250ms.

The estimate improves a bit for tail latencies: the p99 will be less than the sum of the p99 of the individual microservices. This makes intuitive sense: a request has to be very unlucky to fall into the long tail of latencies for all services in the call tree.

Caching Breaks The Dependency Chain / Part 2

The reasoning we applied to availability is equally relevant when it comes to latency: a cache allows us to remove a whole subset of the call tree.

Let's assume that the median latency of each service is 50ms, including the cache.

Without a cache, Edge API's median latency is ~250ms.

If we cache A's responses Edge API's median latency becomes ~150ms (Edge API + B + cache).

If the cache is an order of magnitude faster than an RPC (e.g. in-memory), we can go down to ~100ms - more than twice as fast of what we started with!

What Cache Should I Use?

We determined that the number of components in the hot path has a negative impact on the availability and latency of a microservice system.

Caching on the client-side, if the hit ratio is high enough, is an effective mitigation strategy: it allows us to skip entire subsets of the call tree, significantly improving the availability and the speed of the system.

We will look at three different types of caches: local, distributed, hierarchical.

The Scorecard

For each cache type we will look at:

- ease of operation - do I need to add new components to the system?

- speed - how long does it take to retrieve or set a value in the cache?

- availability - how much does it improve the overall availability of the system?

- observability - how easy is it to reason about the state of the system?

Caching Types

Local Cache

The client keeps a copy of the responses returned by the server on the same machine it is running on.

The data can either be stored in-memory or spilled to disk if the size requires it.

Ease of operation - A local cache can be as simple as Java's ConcurrentHashMap or Rust's RwLock<HashMap<Key, Value>>.

Speed - Local caches are fast: a cache hit is a few orders of magnitude faster than retrieving data directly from the server.

Availability - What happens if the server is down/experiencing degradation?

If the cache is populated with all the responses it needs (warm cache), no problem - the client is not impacted by issues on the server.

Local caches, though, are local (duh): when we launch a new replica of the client its local cache starts completely empty (cold cache). The new client replica cannot populate the cache if the server is unavailable, therefore it experiences degradation.

Observability - It is not straight-forward to inspect the entries on a local cache, especially if memory is used as storage. We have a consistency issue as well - different replicas of our client might be holding different values in the cache for the same key. This is particularly relevant if the data changes often, less so if it is mostly static or slow-moving.

Distributed Cache

The client stores a copy of the responses returned by the server on a specialised remote server (e.g. Redis, Memcached).

Ease of operation - You need to operate another system on top of your applications, with its own failure modes and quirks. You can mitigate the risk by relying on a managed solution operated by the project maintainers or a public cloud provider.

Speed - We need to perform a remote procedural call to access or set a cache entry. The remote cache will be usually retrieving the value from memory, so it should still be noticeably faster than retrieving the data directly from the server.

Availability - All client replicas are accessing the same remote cache.

If the remote cache is warm, existing and new client replicas can keep operating without disruption even if the server is degraded.

Observability - An operator can easily connect to the remote cache to inspect the stored entries. By using the same remote cache we are also guaranteed consistency across different client replicas.

Hierarchical Cache

Each client replica keeps a local cache as well as a remote cache, used as fallback.

In pseudo-code:

if local_cache_hit(request):

return get_from_local_cache(request)

else:

if remote_cache_hit(request):

return get_from_remote_cache(request)

else:

response = call_a(request)

set_local_cache_in_background(response)

set_remote_cache_in_background(response)

return response

This setup is similar to what happens in a CPU cache.

Ease of operation - You need to operate both a local and a remote cache.

Speed - On the happy path, it is as fast as a local cache. If the local cache is cold, a cache hit on the remote cache is still faster than a call to the server.

Availability - All client replicas can fall back to the same remote cache if their local caches are cold.

If the remote cache is warm, existing and new client replicas can keep operating without disruption even if the server is degraded.

Observability - An operator can inspect the entries in the remote cache, not the local ones. We are also reading from the local cache first: for a short time span different replicas of the client might return a different value for the same cache key.

Summary

If speed is your primary concern, a local cache is the way to go.

If your mainly concerned about availability, look into a remote cache.

If you want the best of both worlds, check out a hierarchical cache.

Cache Hit Ratio Vs Freshness

To maximise the usefulness of a cache we need a high rate of cache hits.

Each entry in our cache has a time to live (TTL): how long should we keep reading from the cache instead of checking the source of truth?

If you set a long time to live for your cache entries (e.g. 24 hours) you will boost your cache hit ratio. But you will probably end up reading stale data.

If you set a short time to live you will maximise the freshness of your cache entries, but your availability and latency will suffer - you are calling the server more often.

Are we forced to choose between freshness and resiliency?

There are a few strategies we can use to get the best of both worlds.

Proactive Invalidation

Event-driven architectures are becoming increasingly more common these days.

What if the server emits an event every time a resource is updated?6

The client can listen to those events and evict the corresponding cache entry every time it gets notified of a change.

The next time that particular resource is required the client fetches it again, directly from the server.

Events are amazing to get the most of our cache without compromising on data freshness: we can set a long TTL knowing that stale entries will be proactively invalidated as a reaction to the events emitted by the server.

Background Refreshes

What if the server does not emit events? Are we out of options?

There is another trick you can use: refreshing entries in the background.

Let's look again at the "naive" approach to caching:

if cache_hit(request):

return get_from_cache(request)

else:

response = call_a(request)

set_cache_in_background(response)

return response

We only call the server on cache misses.

We could use a different strategy - trigger a call to the server (in a background task) even on cache hits:

if cache_hit(request):

let response = get_from_cache(request)

refresh_cache_in_background(response)

return response

else:

response = call_a(request)

set_cache_in_background(response)

return response

Every time we refresh a cache entry its TTL is reset: our data is less likely to be stale and, at the same time, we maximise how long our clients can survive a degradation of the server.

There is another positive side-effect: we are less likely to have cache misses in the hot path, therefore leading to a lower (and uniform) latency profile for the client.

There is a catch though: we are putting more load on the server.

You can mitigate the issue by avoid a refresh on every cache hit. You can choose to refresh an entry every X cache hits or based on how much time is left before it expires.

Summary

We quantified when (and how) caching can be used to boost performance and improve the resiliency of a microservice system.

We examined different cache types: local, distributed, hierarchical.

We explored a few additional techniques to get the most out of your caching.

You should now be empowered to make an informed decision on caching for your own systems.

Caching is only one of the possible options - there are other relevant techniques such as retries, read models, redundant requests. Keep exploring!

Footnotes

When computing the availability of an API server we only consider well-formed requests. Let's consider a REST API, for example: if a server receives a POST request whose body is missing a mandatory field it will return a 400 Bad Request status code back to the caller. Does that mean that server is unavailable? Absolutely not: it must reject invalid inputs, it is behaving as expected. That's why we do not consider invalid requests in our definition availability. Alternatively, you could broaden your definition of "successful request" to include rejecting invalid inputs (e.g. 400s) - both approaches lead to the same result in the end.

We are working under the assumption that the network is reliable - one of the well-known fallacies of distributed system. You can easily extend the model to factor the risk of network failures - or you can simply assume that remote dependencies are a bit less reliable than Edge API to account for the risk of communication over the network.

In real world systems failures are often correlated. For example, you might be hosting multiple logical databases on the same physical server (multi-tenancy). A spike in traffic affecting one of your services results in increased load on the database, so high to affect the throughput of the other co-hosted logical databases. You now have failure spikes in multiple components, leading to widespread degradation across the board. We can always refine our models to make correlation sources explicit, but you'd be surprised by the many different ways systems can end up being coupled at the various levels of the stack.

We are assuming that we never experience a failure when retrieving a value from the cache. This is reasonable for an in-memory cache or, generally speaking, when the availability of the cache is an order magnitude higher than the availability of our services. You can add a $p_{\text{cache_fail}}$ to the model to get a more precise approximation.

The median is actually the 50th latency percentile.

For the purpose of cache invalidation, we do not care about what changed. We just need to know that it changed! We can therefore work with both event notifications and event-carried state transfers.