Taking ML to production with Rust: a 25x speedup

If we look at the big picture, butchering all the little details, there are two constants in Machine Learning development:

- model training;

- making predictions (inference, for the cool kids in the back of the room).

Today the language of choice for Machine Learning is Python (unless your working environment has some unusual constraints).

I will take you on a journey.

Hopefully, at the end of it, using Rust as a training backend and deployment platform will not look as crazy or confusing as it sounds. (Title aside, there is much more to it than speed.)

Why Python?

We could spend pages discussing different workflows for ML development, but it is fairly uncontroversial to say that model training usually happens in an exploratory way. You have a set of data, you slice and dice it to get a better understanding, trying different approaches along the way to solve the particular problem you have set your eyes on (identifying kitties in Google Street View pictures? Weather forecasting? Crop yield optimisation? You name it!).

There will be many pitfalls along the way and most of the techniques you end up trying will not work out of the box, hence the focus on fast prototyping and iterative improvements.

This is an ideal use case for a dynamic programming language like Python.

Even more so if you consider that a significant portion of ML practitioners have a background in Statistics, Mathematics, Physics or similar degrees instead of Computer Science - they (me ✋!) have little to no training when it comes to software engineering practices and tools.

While Python supports both functional and object-oriented patterns, you can hit the ground running with its scripting capabilities using an imperative style. The barrier to entry is quite low and Python grows with you as your experience and mastery deepen.

Ease of use alone will not take you far though: training ML models requires a fair amount of heavy number crunching and Python is definitely not the fastest language out there.

That is where NumPy (1995 / 2006), SciPy (2001), Pandas (2008) and Scikit-learn (2007) enter the scene. Python wouldn't be where it is today without such a high-quality and comprehensive toolkit for ML and scientific computing.

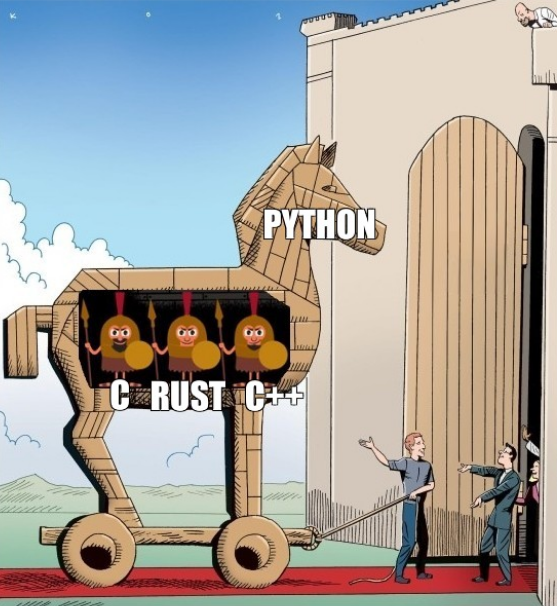

Nonetheless, if you look beneath the surface, there is not that much Python in there: you are using Python to orchestrate and leverage a powerful core of C and C++ routines.

Python is the frontend of these systems, the user interface that makes it easy to stitch things together. C and C++ are your backend, the sprinkle of magic behind the scenes.

Indeed, this is the often overlooked feature of Python: it is reasonably easy to interoperate with other programming languages using its Foreign Function Interface (FFI). In particular, Python libraries can delegate to C and C++ the bits of a program that require intense number crunching - this is the strategy used by all the foundational libraries in the Python scientific ecosystem.
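To make the FFI point concrete, here is a minimal sketch that calls a function from the C standard library through `ctypes`, which ships with Python. It assumes a Unix-like system where the C library can be loaded this way, and the wrapper name `c_strlen` is made up for the example. The scientific libraries use far more elaborate bindings, but the principle is the same: Python declares a C signature and hands the work over.

```python
import ctypes
import ctypes.util

# Locate and load the C standard library (assumption: Unix-like system).
# If the lookup returns None, CDLL(None) still exposes libc symbols on Linux.
libc = ctypes.CDLL(ctypes.util.find_library("c"))

# Declare the C signature: size_t strlen(const char *s)
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t


def c_strlen(text: str) -> int:
    """Measure the UTF-8 byte length of `text` by delegating to C's strlen."""
    return libc.strlen(text.encode("utf-8"))
```

Calling `c_strlen("hello")` crosses the language boundary once and comes back with a plain Python integer.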

Technology, of course, never tells the whole story. The sociological elements are crucial to the success (or demise) of most projects, even if some people find it hard to swallow.

We should thus add to the mix that Python was an open source project (hi MATLAB!), that its penetration in academic institutions was non-negligible, and that most of its scientific ecosystem was already established when Deep Learning conquered the spotlight.

In hindsight, it is not surprising to see Python as a strong candidate in the ML space, which it eventually dominated.

Should we use Python tomorrow?

We have briefly run through some of the characteristics that enshrined Python as the preferred programming language for ML development.

The world does not stay still though: changes to the surrounding landscape can significantly shift the perception of which one is the "best tool for the job".

Some of the latest trends have, if possible, strengthened Python's position in the space.

Microservices

The microservice architecture currently dominates the design space: companies run their businesses as a loose collection of containerised services communicating with each other over the network.

Running a polyglot stack has never been easier: your main application, sancta sanctorum of your business logic, can be written in Java - when you want to leverage ML to decide if a certain credit card transaction is legit or fraudulent you can make a POST request to a Python microservice.

Gone are the days when Data Scientists and Machine Learning Engineers performed their model exploration in Python before handing everything over to the "production team" for a full rewrite in the company's language of choice (thankfully).

DevOps

You build it, you run it - Werner Vogels (Amazon CTO)

If we are talking business, it's important to stress that ML models do not exist in a vacuum: they are part of a product or a process that a company wants to launch, optimise or improve.

It is thus fairly naive to believe that a team composed exclusively of Data Scientists has a good shot at succeeding. You need way more than that.

You need a mix of different skillsets, from product to software engineering, if you want to have a chance to succeed.

What programming language should such a team use?

Remember the rise of JavaScript: using JavaScript and NodeJS empowered the same people to work on both the frontend and the backend of a system ("fullstack").

Python, being a general purpose programming language, offers the same convenience. You can use its scientific stack for ML development and leverage its frameworks (Django, Flask, FastAPI) for model deployment, providing predictions behind a REST or gRPC API.

Sweet, isn't it?
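As a rough illustration of "predictions behind an API", the sketch below serves a toy model over HTTP using only the standard library. Everything in it is hypothetical: the hard-coded `CENTROIDS` stand in for a trained model, and the names `predict`, `PredictHandler` and `serve` are invented for the example. A real service would more likely use one of the frameworks mentioned above.

```python
import json
import math
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a trained model: two fixed 2-D centroids
CENTROIDS = [(0.0, 0.0), (10.0, 10.0)]


def predict(point):
    """Return the index of the centroid closest to `point`."""
    point = tuple(point)
    return min(range(len(CENTROIDS)), key=lambda k: math.dist(point, CENTROIDS[k]))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read the JSON request body, e.g. {"point": [9.0, 8.5]}
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps({"cluster": predict(payload["point"])}).encode("utf-8")
        # Send the prediction back as JSON
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host="127.0.0.1", port=8000):
    """Block forever, answering POST requests with cluster predictions."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Note how little of this is ML: the handler is a thin shell around a single function call.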

Network effect

- Python has a huge ML ecosystem;

- You want your ML algorithm or ML framework to be adopted: you write it in Python (or you provide Python bindings for it using FFI);

- The Python ecosystem just got bigger.

Rinse and repeat.

The answer

We will probably still use Python tomorrow to write ML software.

Will we use it forever? Unlikely, it's like asking ourselves what the future of computing will look like 10 years from today.

But I would be wary of betting on Python's sunset in the next 5 years.

So what? Wasn't this a Rust article?

It is indeed!

But it was important to clean the air of any possible misunderstanding before getting started.

I don't believe Rust will replace Python as the language of choice for ML - it just isn't happening, it's not a thing, neither today nor tomorrow.

They don't cater to the same crowd and they are optimised to solve a different set of problems under different constraints.

But Rust has a part to play in the ML world.

Rust has huge potential to replace C and C++ as Python's preferred backend for ML workloads.

Why Rust?

There is no better answer than what the foreword of the Rust book states:

Take, for example, “systems-level” work that deals with low-level details of memory management, data representation, and concurrency. Traditionally, this realm of programming is seen as arcane, accessible only to a select few who have devoted the necessary years learning to avoid its infamous pitfalls. And even those who practice it do so with caution, lest their code be open to exploits, crashes, or corruption. Rust breaks down these barriers by eliminating the old pitfalls and providing a friendly, polished set of tools to help you along the way. Programmers who need to “dip down” into lower-level control can do so with Rust, without taking on the customary risk of crashes or security holes, and without having to learn the fine points of a fickle toolchain. Better yet, the language is designed to guide you naturally towards reliable code that is efficient in terms of speed and memory usage.

Rust provides performance on par with C and C++ with a completely different level of confidence.

You trust the compiler to know what you don't know: you have, in other words, a safe route from "What the fuck is this?" to "Let's run this code in production!".

This lowers the barrier to entry dramatically.

Way more people (me again ✋!) can have a go at writing high-performance ML algorithms.

Way more people have a shot at contributing to the backends of those projects they are using on a daily basis.

This leads to a bigger community, more experiments, more sustainable projects - in other words, a healthier and more diverse ecosystem.

Going back to those trends I mentioned before, you can spot again the power that comes with being fullstack: the same person who took care of the model exploration (in Python) can deep dive and optimise the final solution using Rust to rewrite its hot path.

How easy is that though, in practice?

An experiment: clustering

I prepared a workshop for RustFest 2019: we implemented K-Means clustering from scratch using ndarray, a Rust equivalent of NumPy.

I wrote some notes on the workshop a couple of weeks ago and the material can be found on GitHub: it's structured as a series of test-driven exercises, with each step contributing to the final solution.

I couldn't silence the question: how fast was a teaching implementation of K-Means in Rust compared to the one offered by scikit-learn?

I spent both implementation days of RustFest with a bunch of other curious people to provide an answer. Without @sitegui, @dunnock and @ThomAub it would have taken way longer: thanks a lot for your help!

The implementation

I have released a cleaned up K-Means implementation as a Rust crate, linfa-clustering. linfa-clustering is a subset of linfa - we'll talk more about it later.

As you can see from the source code, the focus is on clarity rather than obscure optimisations: it's a textbook implementation of Lloyd's algorithm.

Most speed-up opportunities have not been exploited and there is definitely room for profiling and polishing - e.g. it only leverages multithreading for the assignment step, while the update step is single-threaded.
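For readers who have not met Lloyd's algorithm before, the two steps named above can be sketched in a few lines of dependency-free Python. This is an illustrative, single-threaded sketch and not the linfa-clustering source. The function names (`assign`, `update`, `k_means`), the seeded random initialisation and the choice to leave an empty cluster's centroid where it was are all assumptions made for the example.

```python
import math
import random


def assign(points, centroids):
    """Assignment step: index of the closest centroid for every point."""
    return [
        min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
        for p in points
    ]


def update(points, labels, centroids):
    """Update step: move each centroid to the mean of its assigned points."""
    new_centroids = []
    for k, old in enumerate(centroids):
        members = [p for p, label in zip(points, labels) if label == k]
        if not members:
            # An empty cluster keeps its previous centroid in this sketch
            new_centroids.append(old)
            continue
        new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
    return new_centroids


def k_means(points, n_clusters, max_iter=100, tol=1e-4, seed=42):
    """Alternate the two steps until no centroid moves by `tol` or more."""
    rng = random.Random(seed)
    # Random initialisation: pick distinct observations as starting centroids
    centroids = rng.sample(points, n_clusters)
    for _ in range(max_iter):
        labels = assign(points, centroids)
        new_centroids = update(points, labels, centroids)
        shift = max(math.dist(a, b) for a, b in zip(centroids, new_centroids))
        centroids = new_centroids
        if shift < tol:
            break
    return centroids, assign(points, centroids)
```

The assignment step treats every point independently, which is why it is the natural candidate for multithreading. The update step aggregates over all the points in a cluster, so it needs a little more care to parallelise.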

To perform an apples-to-apples comparison, I wrote Python bindings for the Rust implementation: linfa is on PyPI as a Python library.

In particular, I was interested to compare:

- training time;

- inference time, as measured when the model is exposed as a gRPC microservice.

Measuring how long it takes to provide predictions exposing the model as a microservice takes us way closer to what it would actually look like to use this code in a real production environment.

Instructions, results and code to reproduce the benchmarks are available on GitHub.

Training benchmark

Using pytest-benchmark, it seems that linfa (Python wrapper) is ~1.3x faster than scikit-learn in training a K-Means model on a dataset of 1 million points.

| Library | Mean training time (ms) |
|---|---|
| Linfa (Python wrapper on Rust) | 467.2 |
| Scikit-learn | 604.7 (1.3x slower) |

Overall, their speed is comparable - linfa is probably slightly faster due to the parallel assignment step.

If you find this underwhelming, think twice: we are comparing an implementation put together in two days for a teaching workshop with the implementation used by the most well established ML framework out there.

It's insane.

As you can see from the benchmark code, linfa's K-Means implementation provides a scikit-learn-like interface.

```python
from sklearn.datasets import make_blobs
import pytest
from linfa import KMeans
from sklearn.cluster import KMeans as sk_KMeans


@pytest.fixture(scope="session", autouse=True)
def make_data():
    return make_blobs(n_samples=1000000)


def test_k_means_rust(benchmark, make_data):
    dataset, cluster_index = make_data
    model = KMeans(3, max_iter=100, tol=1e-4)
    labels = benchmark(model.fit_predict, dataset)
    assert len(labels) == len(cluster_index)


def test_k_means_python(benchmark, make_data):
    dataset, cluster_index = make_data
    # Using the same algorithm
    model = sk_KMeans(3, init="random", algorithm="full", max_iter=100, tol=1e-4, n_init=1)
    labels = benchmark(model.fit_predict, dataset)
    assert len(labels) == len(cluster_index)
```

I'd like to give you a taste of the Rust version as well - the interface looks slightly different (for reasons I will probably talk about in another blog post), but you can easily recognise the same steps:

```rust
use linfa::clustering::{generate_blobs, KMeans, KMeansHyperParams};
use ndarray::array;
use ndarray_rand::rand::SeedableRng;
use rand_isaac::Isaac64Rng;

fn main() {
    // Our random number generator, seeded for reproducibility
    let mut rng = Isaac64Rng::seed_from_u64(42);

    // For each of our expected centroids, generate 10000 data points around it (a "blob")
    let expected_centroids = array![[10., 10.], [1., 12.], [20., 30.], [-20., 30.]];
    let dataset = generate_blobs(10000, &expected_centroids, &mut rng);

    // Configure our training algorithm
    let n_clusters = 4;
    let hyperparams = KMeansHyperParams::new(n_clusters)
        .max_n_iterations(200)
        .tolerance(1e-5)
        .build();

    // Infer an optimal set of centroids based on the training data distribution
    let model = KMeans::fit(hyperparams, &dataset, &mut rng);

    // Assign each point to a cluster using the set of centroids found using `fit`
    let labels = model.predict(&dataset);
}
```

Inference benchmark

As mentioned before, serving ML models using a dedicated microservice is quite an established pattern in the industry.

Very often though, there is little to no business logic inside these microservices: it's nothing more, nothing less than a remote function call.

Given a serialised ML model, could we entirely automate/abstract away the API generation? I definitely think so, as the rise in popularity of TensorFlow Serving confirms.

I have thus decided to benchmark three scenarios:

- scikit-learn's K-means served on Python's gRPC server;

- linfa's K-means (Python wrapper) served on Python's gRPC server;

- linfa's K-means (Rust) served on Rust's gRPC server (tonic).

I haven't performed any sort of fine tuning on these gRPC web servers: we are looking at out-of-the-box performance. Once again, I invite you to have a look at the source code (Rust / Python) - no dragons in there.

linfa on a Rust web server handles 25x more requests per second than scikit-learn and 7x more than linfa (Python wrapper) on a Python gRPC server.

The same holds for latency (how long it takes to provide a response), where linfa on a Rust web server is consistently 26x faster than scikit-learn and 7x faster than linfa (Python wrapper) on a Python web server.

linfa on a Rust web server also has the lowest error rate under heavy load.

A new workflow

It's too small an experiment to draw drastic conclusions, and I am sure you can find faster implementations of Lloyd's algorithm for K-Means out there.

But I hope it was enough to convince you that Rust could play a part in ML development. Anybody could have written that Rust implementation with a bit of training in how ndarray works (try the workshop material!) - how much potential is currently locked away in ML practitioners because of the barrier to entry of C and C++?

If that is not enough, Rust goes beyond replacing C and C++ as Python's backend - Rust can leverage its growing async ecosystem to take care of the deployment piece.

It could be as easy as:

- identify your model candidate using a Rust-powered Python library;

- serialise the final model;

- provide the path of the final model and the expected schema of your input data as configuration;

- profit.
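To give a feel for how thin the glue could be, here is a sketch of the middle steps in plain Python. It is entirely hypothetical: the JSON model format, the `model_path` and `schema` configuration keys and the function names are invented for illustration, and a real serving layer would sit on top of the returned `predict` function.

```python
import json
import math
import tempfile
from pathlib import Path


def serialise_model(centroids, path):
    """Persist the trained model (here, just the K-Means centroids) as JSON."""
    Path(path).write_text(json.dumps({"centroids": centroids}))


def build_predictor(config):
    """Turn a model path plus an input schema into a ready-to-serve function."""
    stored = json.loads(Path(config["model_path"]).read_text())
    centroids = [tuple(c) for c in stored["centroids"]]
    n_features = config["schema"]["n_features"]

    def predict(point):
        # Reject inputs that do not match the declared schema
        if len(point) != n_features:
            raise ValueError(f"expected {n_features} features, got {len(point)}")
        point = tuple(point)
        return min(range(len(centroids)), key=lambda k: math.dist(point, centroids[k]))

    return predict


def demo():
    """Serialise a toy model, then load it back purely from configuration."""
    with tempfile.TemporaryDirectory() as tmp:
        model_path = Path(tmp) / "model.json"
        serialise_model([[0.0, 0.0], [10.0, 10.0]], model_path)
        config = {"model_path": str(model_path), "schema": {"n_features": 2}}
        predict = build_predictor(config)
        return predict([9.0, 8.5])
```

Nothing in `build_predictor` is specific to one model instance: swap the file and the schema and the same code serves a different model.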

Definitely an idea worth exploring in 2020.

Going forward

As I briefly mentioned before, linfa-clustering is a subset of linfa, a general ML framework in Rust that I plan to focus on in 2020.

Even calling it a framework, at this point in time, is premature: linfa-clustering is indeed the only piece in there 😀

There is a long way to go to fulfill its bold mission statement, but there is significant lurking interest in the Rust ecosystem when it comes to ML and its surroundings: sometimes a small spark is all you need to light a beacon fire.

In fact, it is a firm belief of mine that only a significant community effort can nurture, build and sustain an ML ecosystem in Rust - there is no other way forward.

The Rust ecosystem is indeed rich in ML crates - just take a look at what a quick search for machine learning on crates.io returns.

No need to go and rewrite everything from scratch: I picture linfa as a meta-package, a collection of curated algorithm implementations from the Rust ecosystem. The first stop for your ML needs, as scikit-learn for Python.

If something is missing, we will write it.

If something is already available and it can be exposed with a compatible interface, we will adopt it.

Coming up with a set of traits could indeed be one of the most significant contributions - but first we must explore, then we will abstract.
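To picture what a shared interface buys you, here is a loose Python analogue, where `typing.Protocol` plays a role roughly similar to a Rust trait. None of this is linfa's API: `Clusterer`, `MidpointSplit` and `fit_predict` are hypothetical names, and the toy clusterer exists only to show that any type with the right methods can be plugged in.

```python
from typing import List, Protocol, Sequence, Tuple, runtime_checkable

Point = Tuple[float, ...]


@runtime_checkable
class Clusterer(Protocol):
    """Hypothetical shared interface: anything with `fit` and `predict`."""

    def fit(self, dataset: Sequence[Point]) -> "Clusterer": ...

    def predict(self, dataset: Sequence[Point]) -> List[int]: ...


class MidpointSplit:
    """Toy 1-D clusterer: split points at the midpoint of the observed range."""

    def fit(self, dataset: Sequence[Point]) -> "MidpointSplit":
        values = [p[0] for p in dataset]
        self.threshold = (min(values) + max(values)) / 2
        return self

    def predict(self, dataset: Sequence[Point]) -> List[int]:
        return [int(p[0] > self.threshold) for p in dataset]


def fit_predict(model: Clusterer, dataset: Sequence[Point]) -> List[int]:
    """Works with any implementation that satisfies the interface."""
    return model.fit(dataset).predict(dataset)
```

`MidpointSplit` never mentions `Clusterer`, yet `fit_predict` accepts it. An existing crate exposed "with a compatible interface" would slot in the same way.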

If this strikes a chord with you, please take a look at the roadmap - I am looking forward to your contributions!

Notes, suggestions and feedback on this article are more than welcome: you can reach me on Twitter at @algo_luca, GitHub at @LukeMathWalker or email [email protected].