ML: Time to Embrace Version Control

At data science meetups there is a recurring horror story: projects where code and data were passed around between teammates (and clients) as zip files attached to emails.

If you have ever worked on a PowerPoint presentation before the Google Slides era, you know what I am talking about. The most common source of issues in those situations is versioning: knowing how each zip file relates to the others in order to understand what has been changed by whom and, more importantly, which version of the project you are supposed to be working on.

Those horror stories usually go on to mention the most advanced versioning convention known to the human race - manual filename tracking: project_v1.zip, project_v2.zip, project_v2_bis.zip, etc.

Please have mercy, we can surely do better than that.

Especially if you have invested several thousand pounds in launching a Data Science project or building up a Machine Learning team. You have to.

As is often the case, other people have been there before us and have come up with smart solutions to our problems: software developers. They might be slightly out of fashion nowadays (they ain't doing the AI thing that everyone talks about!) but they had to find a way to cooperate effectively on software projects a couple of decades before Data Scientist was even considered a legit job title.

It wasn't an easy ride, but the software development community eventually adopted version control software as the preferred way to manage and coordinate team contributions to a software project. The most famous tool in this space is certainly git, followed at a distance by mercurial.

Version control?

The core idea is quite simple: every time someone wants to contribute to the project with some changes, they bundle them together in a commit and that contribution is added at the end of the project history. Every commit is an atomic unit of contribution and the history is nothing more than an ordered sequence of commits - it allows us to observe the status of the project at any point in time: from the first prototype to the latest production change, it's simply a matter of choosing what commit to look at.

Reality is a little messier, with branches and merge conflicts, but that has more to do with the ergonomics of using a (distributed) version control software as well as team dynamics.

What matters to us is that we can ask questions such as:

- when was bug X introduced?

- who was the last one to change line Y?

- what did line Y look like before the change?

- what code was in production two months ago when [catastrophic event] happened?

And this is possible because we have a single source of truth for code - our version control history.
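To make the idea concrete, here is a toy sketch in Python of a history as an ordered list of commits. It is not how git stores things internally (git keeps snapshots of whole files, not per-line dictionaries), and `Commit`, `state_at` and `last_change` are hypothetical names made up for illustration. It only shows why an ordered history is enough to answer the questions above.

```python
from dataclasses import dataclass


@dataclass
class Commit:
    """One atomic contribution: who changed which lines, and why."""
    author: str
    message: str
    changes: dict  # line number -> new content of that line


def state_at(history, n):
    """Replay the first n commits to rebuild the file as it was then."""
    lines = {}
    for commit in history[:n]:
        lines.update(commit.changes)
    return lines


def last_change(history, line_no):
    """Return (index, commit) of the most recent commit touching a line."""
    for index in range(len(history) - 1, -1, -1):
        if line_no in history[index].changes:
            return index, history[index]
    return None


# A made-up project history, oldest commit first.
history = [
    Commit("alice", "first prototype", {1: "rate = 0.1", 2: "print(rate)"}),
    Commit("bob", "tune rate", {1: "rate = 0.5"}),
    Commit("alice", "add logging", {3: "log(rate)"}),
]

index, commit = last_change(history, 1)
print(commit.author)                # who was the last one to change line 1?
print(state_at(history, index)[1])  # what did line 1 look like before that?
```

With a real repository you would not write any of this yourself: the version control tool answers the same questions directly from its history.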

Application code is not enough

Suppose that you are building a website - a blog, to be more specific.

You have designed your application using the latest JavaScript framework and it runs smoothly on your machine when you test it out on localhost. But that is not enough to make it visible and available to the world!

You will have to get yourself a server to host the website, you will need to register a domain with a DNS service and more often than not you will also have to spin up some kind of database that your application will be talking to.

How do you ensure that all these pieces will work together and run smoothly?

You might have forgotten to freeze your dependencies in the JavaScript application: when you install them on the server you get more recent versions of a couple of packages that contain a bug that crashes your blog. Bad!

You might be using a slick MacBook Pro as your workhorse machine, but your server will probably run some flavour of Linux - another source of trouble. Bad!

Your database might be communicating with your application just fine when running both of them on localhost, but you forgot to set the proper permissions to make the servers hosting them communicate with each other - now your live blog can't reach its database and all posts/comment sections are empty. Bad!

There is a recurring pattern: all our code lives in a version control repository and we have made sure that it works (unit tests, acceptance tests, etc.) but there is a whole universe surrounding an application in production that is not accounted for and that is equally likely to cause malfunctions in our software project. This universe is not captured in our version control system. How do we solve it?

You version everything.

How do you do that? You start by encoding environment, configuration, infrastructure and processes as code - there are a number of tools to make this possible, ranging from Docker containers for reproducible environments to Terraform modules for reproducible infrastructure, as well as continuous deployment tools to make sure that what lives in the version-controlled repository gets properly deployed in the right environments (production, development, etc.). Processes need to be stored as code as well.

The question you need to ask yourselves, as this article suggests, is:

Can I recreate a specific version of the complete software system — with infrastructure, data, software, and configuration — by running one command that gets a specific revision from my version-control system?

If you cannot, you're not versioning everything. If you're not versioning everything, you cannot consistently reproduce your application.

That has nefarious far-reaching consequences, and I'd dare to say that it is the root cause of a lot of technical debt, complexity and failures when it comes to Machine Learning systems.

Why is Machine Learning so special?

Data is the key differentiator between a Machine Learning project and a traditional software project: even if everything else stays stable, changing the data your models are trained upon makes a huge difference. And data, due to its size and nature, is very difficult to capture in version control systems such as git.

We can gather further insights looking at how a Machine Learning project works.

We can usually identify two different phases:

- Prototyping - different setups (algorithms, hyperparameters, data sources, preprocessing strategies) are tested out until the most promising solution is identified to solve the business problem at hand;

- Production - the ML model is deployed alongside all the relevant machinery required to make it work (a serving API, some ETL pipelines, etc.).

Training, unless you are dealing with some online-learning model, usually happens before a model gets deployed, in the late prototyping phase. The trained model is an artifact generated from the combination of a certain number of data sources and the code used to process and learn from them.

The prototyping phase thus directly influences what happens in the production phase - reproducing a training run becomes extremely relevant once one of the models is deployed to production and something goes wrong.

To ensure training run reproducibility, we should keep track of:

- data provenance or data lineage - where does this dataset come from (Is it the outcome of a database query? Is there an original raw dataset? Is it the result of a script run on top of another dataset?);

- experiment setup - where do we run our training? Is the environment reproducible? Can we associate the data that was used with the resulting model we have trained?

- freshness - are we sure that every time we change a step in our data processing pipeline we have recomputed all stale intermediate datasets before running another training session for a model?

- logging - how do we keep track of results?
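The freshness question in particular lends itself to a small sketch. One possible approach, assumed here purely for illustration, is to give each pipeline step a fingerprint derived from its own code and from the fingerprints of the steps it depends on: if the stored fingerprint of an output no longer matches, that output is stale. The step names, the strings standing in for step code, and the helpers `fingerprint` and `stale_steps` are all hypothetical.

```python
import hashlib


def fingerprint(*parts):
    """Hash a step's code and its inputs' fingerprints into one short id."""
    digest = hashlib.sha256()
    for part in parts:
        digest.update(part.encode("utf-8"))
        digest.update(b"\0")  # separator so ("ab", "c") != ("a", "bc")
    return digest.hexdigest()[:12]


def stale_steps(pipeline, recorded):
    """Return the steps whose stored output no longer matches their inputs.

    pipeline: list of (name, code, upstream step names) in execution order.
    recorded: name -> fingerprint saved when the output was last computed.
    """
    current, stale = {}, []
    for name, code, upstream in pipeline:
        current[name] = fingerprint(code, *(current[u] for u in upstream))
        if recorded.get(name) != current[name]:
            stale.append(name)
    return stale


# A made-up three-step pipeline; the "code" strings stand in for real scripts.
pipeline = [
    ("raw", "SELECT * FROM events", []),
    ("clean", "drop rows with nulls", ["raw"]),
    ("features", "one-hot encode country", ["clean"]),
]

# Pretend every output was computed once and its fingerprint recorded.
recorded = {}
for name, code, upstream in pipeline:
    recorded[name] = fingerprint(code, *(recorded[u] for u in upstream))

# Changing the cleaning step invalidates it and everything downstream.
pipeline[1] = ("clean", "fill nulls with zero", ["raw"])
print(stale_steps(pipeline, recorded))  # ['clean', 'features']
```

The point of chaining the fingerprints is that a change early in the pipeline propagates automatically: nobody has to remember which intermediate datasets depend on what.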

All of this is necessary if you want to be able to go back and take a look at every little step you took to produce that serialized model in order to nail down what went wrong where - to debug it! (Or to audit it, if you work in an industry that requires it.)

If everything is captured in version control then all the things we have mentioned so far can be achieved - reproducibility for Machine Learning systems becomes feasible.
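As a rough illustration of what "capturing everything" could look like for a single training run, the sketch below writes down a small run record: a hash of the data, the code revision, the hyperparameters, the environment and the results. The field names and the `run_record` helper are assumptions made for this example, not an established format, and the dataset, revision and metric values are placeholders rather than real results.

```python
import hashlib
import json
import platform
import sys


def sha256_of(data: bytes) -> str:
    """Content hash: two datasets with the same hash are byte-identical."""
    return hashlib.sha256(data).hexdigest()


def run_record(dataset, code_revision, params, metrics):
    """Bundle what is needed to trace a trained model back to its inputs."""
    return {
        "data_sha256": sha256_of(dataset),      # data provenance
        "code_revision": code_revision,         # which commit produced it
        "params": params,                       # experiment setup
        "python": platform.python_version(),    # environment
        "platform": sys.platform,
        "metrics": metrics,                     # logging of results
    }


record = run_record(
    dataset=b"age,label\n31,1\n47,0\n",       # stand-in for a real file's bytes
    code_revision="<commit hash goes here>",  # placeholder, filled in by your tooling
    params={"learning_rate": 0.1, "max_depth": 3},
    metrics={"accuracy": 0.0},                # placeholder, not a real result
)
print(json.dumps(record, indent=2, sort_keys=True))
```

A record like this is small enough to be committed next to the code, which is what ties the serialized model back to a specific point in the project history.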

The state of Machine Learning in 2018

I'd like to have something similar to the State of DevOps report for Machine Learning in the industry, but as far as I know we don't have an equivalent publication.

Inferring from what I have been able to witness and read, I am fairly confident that, apart from a handful of well-known tech companies (looking at you, Uber's Michelangelo), we are not even close to this level of rigor in designing Machine Learning systems.

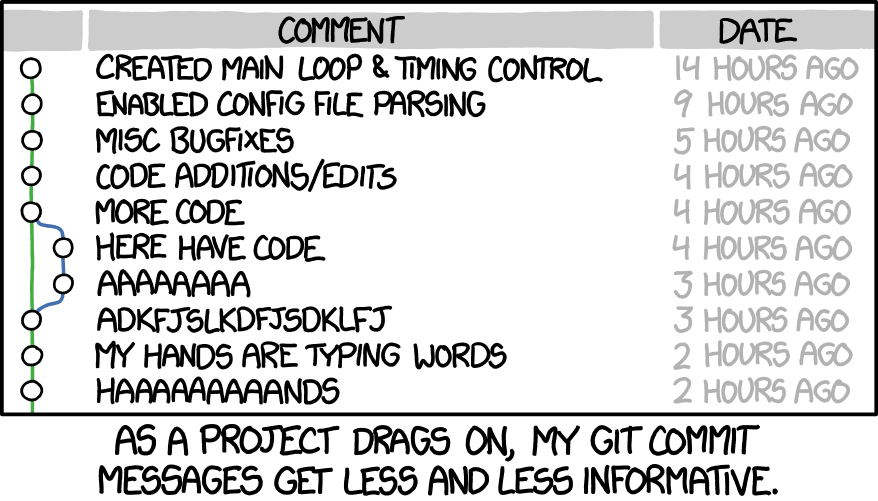

We haven't even agreed on a proper set of best practices: a lot of people are not even using version control for their code, and don't get me started on Jupyter Notebooks...

The root causes

There is a cultural component ("this is software engineering shit, why should I care? I am a scientist!"), it can't be denied, but at the same time we can't underestimate the technical obstacles that practitioners have to overcome to achieve a fully reproducible machine learning workflow.

Putting every piece involved in a Machine Learning project under version control, today, is hard.

Speaking from a Python point of view, I'd say that:

- we don't have a proper system to manage data dependencies in the same way we manage code dependencies;

- data cannot be version controlled using `git` and when you inherit a project repository you need to search for `s3` links to understand where the data comes from (some cool projects are coming out in this direction, such as lazydata);

- certain algorithms (e.g. neural networks) require custom hardware but Continuous Integration tools such as CircleCI or TravisCI do not support out of the box remote execution of pipeline steps - it becomes quite cumbersome to launch a training job on a Cloud provider server and then execute logic based on the results (I have tried). It thus becomes difficult to make training a part of the CI pipeline that runs before deployment - this weakens the "source of truth" status of your version controlled repository;

- managing infrastructure as code requires a lot of knowledge that is usually not available in a machine learning team. We need tools that abstract away the complexity of it while empowering us to customize what matters for our workloads. dotscience, Valohai and comet.ml are definitely worthy candidates in this space.

These tools need to mature and be widely adopted, as well as integrate properly with version control tools already in use for code, such as git. But that is not enough.

How do we fix it?

We need data scientists and machine learning specialists to understand that software lies at the heart of the systems we work with and we need to adopt all the best practices that have been developed over time to deal with it. We have surely inherited all the issues, so we'd better pick up the good bits as well!

There is a LOT OF WORK to be done to replicate for Machine Learning systems what DevOps has done for Software Development.

Do we want to call it DataOps or MLOps? We might.

What is certain is that it is time to roll up our sleeves.